Robot Perception & Manipulation

Robotic systems can process images and video far faster than humans. Using that advantage in “perception” has paved the way for breakthroughs in security, surveillance and related activities. Pairing that perception with manipulation enables robots to become “butlers” that can interpret and organize a cluttered environment, helping an aging population tidy their homes and accomplish other tasks so that they can continue to live independently.

How does this work?

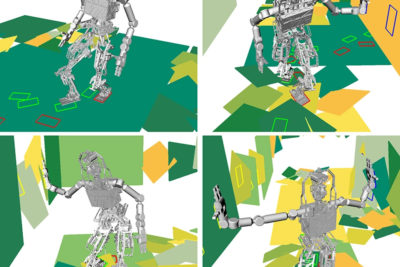

Understanding what the robot sees in the world is a significant challenge. Lighting conditions vary, motion in the world is very complex, and video contains a huge amount of data to process. Perception research investigates mathematical models and advanced computer algorithms for perceiving the world. Researchers in UM Robotics investigate both physical models of perception and data-driven, machine learning models.

Computerized “deep neural networks,” modeled after human brain activity, have become proficient at perceiving and identifying commonly occurring activities, such as people walking and running. They work less well, however, for uncommon activities, such as a person falling on a sidewalk near the street. U-M researchers are working on technologies that will allow machines to do what comes naturally to people, who have an exceptional ability to react appropriately to new phenomena with few or no previous examples and little explanation to work from.

To do so, computers must process and “understand” huge amounts of data quickly and efficiently. To understand video content, researchers have formulated methods for processing pixels within volumes of space and time rather than through a sequence of frames. This leads to a more difficult modeling and computational problem, but yields more robust output.
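The contrast between frame-by-frame and space-time processing can be illustrated with a toy example. The sketch below is not the researchers' method; it is a minimal illustration that assumes a grayscale clip stored as a NumPy array of shape (time, rows, columns), and the function names `volume_mean` and `framewise_mean` are hypothetical. It averages each pixel either over a small block within a single frame or over a small block that also spans neighboring frames.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def volume_mean(video, size=3):
    """Average over size x size x size blocks of (time, row, column)."""
    windows = sliding_window_view(video, (size, size, size))
    return windows.mean(axis=(-3, -2, -1))


def framewise_mean(video, size=3):
    """Average over size x size spatial blocks, one frame at a time."""
    windows = sliding_window_view(video, (size, size), axis=(1, 2))
    return windows.mean(axis=(-2, -1))


# Synthetic clip (an assumption for illustration): 5 frames of 6x6 pixels
# with constant brightness 1.0 and a single-frame glitch at one pixel.
video = np.ones((5, 6, 6))
video[2, 3, 3] = 10.0

per_frame = framewise_mean(video)  # shape (5, 4, 4)
volume = volume_mean(video)        # shape (3, 4, 4)

# The space-time block spreads the glitch over three frames, so its
# largest response is smaller than the per-frame result.
print(per_frame.max(), volume.max())
```

In this toy case the space-time average damps the one-frame glitch more strongly than the per-frame average, at the cost of touching three times as many pixels per output value. That mirrors, in miniature, the trade-off described above: more computation in exchange for more robust output.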

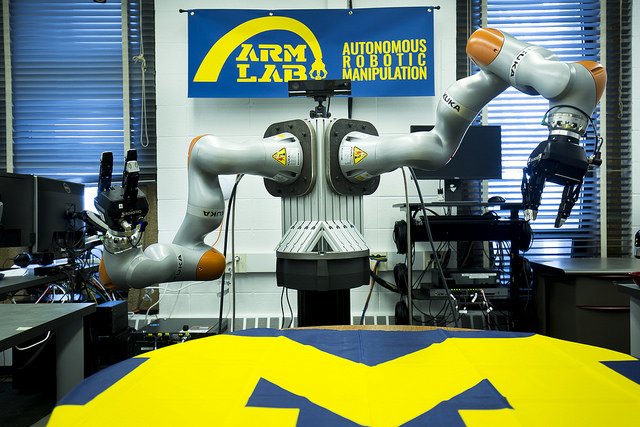

Robots also need to improve at another set of skills that humans pick up naturally as infants: physical manipulation. Michigan engineers have developed a Fetch robot that has learned how to pick up objects and move them around based on a simple demonstration from a human. But for the Fetch robot to perform more fully mobile manipulation, it will need to see the world around it much better than it does now. It will need to know what it can do with every object it sees, whether that is the handle of a door or the handle of a cup, each of which has its own function.

But what if a robot tried to do something more complicated, like making breakfast? The robot would need to reason through all the different actions it would need to take with various objects. Together, these actions form a chain that can achieve a larger task. The next generation of robots will need to attain a level of reasoning that allows them to understand and reliably respond to the new objects that they see in the real world.

Robotics Faculty doing Robot Perception & Manipulation research include: